AI Transparency on FHIR, published by HL7 International / Electronic Health Records. This guide is not an authorized publication; it is the continuous build for version 1.0.0-ballot built by the FHIR (HL7® FHIR® Standard) CI Build. This version is based on the current content of https://github.com/HL7/aitransparency-ig/ and changes regularly. See the Directory of published versions

Page standards status: Informative

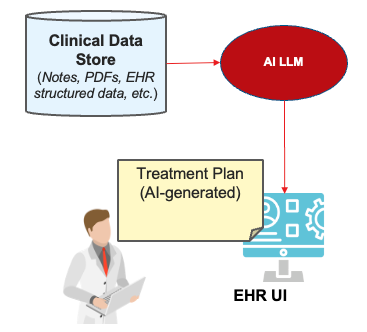

Clinicians reviewing previous treatment plans must distinguish between content inferred by AI, decisions assisted by AI, and those made without AI involvement. This parallels the standard practice of identifying the human clinician responsible for a treatment plan, their role in its development, and their clinical background.

Moreover, the use case can be expanded to different roles and contexts, as shown in the table below:

Data Viewing Questions by Actor

| When this Actor is Viewing Data | The key questions may be… |
|---|---|
| Clinician | What is happening (to modify)? Why is it happening? |
| Payor | What matches prior authorization criteria? |
| QI | What matches the desired outcomes, or desired approach to care? |
| Safety Board | Multiple questions for root cause analysis |
| Legal | Who is responsible? |

When AI trains on clinical content, it should avoid training on data that was generated by AI.
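One way to apply this when assembling a training set is to drop any resource that is marked as AI-generated. The sketch below assumes two markers: a `meta.security` label with code `AIAST` from the HL7 v3 ObservationValue code system, and a Provenance whose agent references a known AI Device. Both the choice of label and the `Device/ai-model-1` reference in the usage are assumptions for illustration, not requirements stated on this page.

```python
from typing import Any

Resource = dict[str, Any]

# Assumed marker for AI-generated content (verify against the guide's profiles).
AI_LABEL_SYSTEM = "http://terminology.hl7.org/CodeSystem/v3-ObservationValue"
AI_LABEL_CODE = "AIAST"


def has_ai_label(resource: Resource) -> bool:
    """True if meta.security carries the assumed AI-asserted label."""
    for coding in resource.get("meta", {}).get("security", []):
        if coding.get("system") == AI_LABEL_SYSTEM and coding.get("code") == AI_LABEL_CODE:
            return True
    return False


def ai_targets(provenances: list[Resource], ai_device_refs: set[str]) -> set[str]:
    """References of resources whose Provenance lists a known AI Device as an agent."""
    targets: set[str] = set()
    for prov in provenances:
        agents = {(a.get("who") or {}).get("reference") for a in prov.get("agent", [])}
        if agents & ai_device_refs:
            targets.update(t["reference"] for t in prov.get("target", []) if "reference" in t)
    return targets


def training_candidates(
    resources: list[Resource], provenances: list[Resource], ai_device_refs: set[str]
) -> list[Resource]:
    """Keep only resources with no AI label and no AI-agent Provenance."""
    excluded = ai_targets(provenances, ai_device_refs)
    return [
        r for r in resources
        if not has_ai_label(r) and f"{r['resourceType']}/{r['id']}" not in excluded
    ]
```

Checking both markers is deliberately conservative: a resource is excluded if either the resource itself or its Provenance indicates AI involvement.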

While a given AI model is in use, it may become apparent that the model is producing poor or dangerous outputs. One then needs to discover all the instances where a Patient may have been impacted by that model.

All outputs from a given AI model would be traceable back to a single Device resource that describes that model. One therefore needs to trace to all resources with a Provenance.agent referencing that Device instance, and should also trace to all resources that otherwise reference that Device instance (e.g., Observation.device, Composition.author).
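The trace described above can be sketched over an in-memory set of FHIR JSON resources. This is a minimal illustration, not a defined algorithm from this guide: it collects Provenance targets whose agent references the Device, plus Observations and Compositions that reference it directly, then maps those resources to Patients through `subject`. The function names and `Device/ai-1` are hypothetical. Against a FHIR server, the same trace corresponds roughly to the standard searches `Provenance?agent=Device/[id]`, `Observation?device=Device/[id]` and `Composition?author=Device/[id]`.

```python
from typing import Any, Iterator

Resource = dict[str, Any]

# Direct-reference elements named in the text; extend for other resource types.
DIRECT_DEVICE_PATHS = {
    "Observation": ["device"],
    "Composition": ["author"],
}


def _refs(value: Any) -> Iterator[str]:
    """Yield reference strings from a single Reference or a list of References."""
    items = value if isinstance(value, list) else [value]
    for item in items:
        if isinstance(item, dict) and "reference" in item:
            yield item["reference"]


def resources_touched_by_device(resources: list[Resource], device_ref: str) -> set[str]:
    """Return 'Type/id' references of resources linked to device_ref."""
    hits: set[str] = set()
    for r in resources:
        rtype = r.get("resourceType")
        if rtype == "Provenance":
            agent_refs = {ref for a in r.get("agent", []) for ref in _refs(a.get("who"))}
            if device_ref in agent_refs:
                hits.update(_refs(r.get("target", [])))
        for path in DIRECT_DEVICE_PATHS.get(rtype, []):
            if device_ref in _refs(r.get(path)):
                hits.add(f"{rtype}/{r['id']}")
    return hits


def impacted_patients(resources: list[Resource], device_ref: str) -> set[str]:
    """Patients referenced as the subject of any resource touched by the Device."""
    touched = resources_touched_by_device(resources, device_ref)
    patients: set[str] = set()
    for r in resources:
        if f"{r.get('resourceType')}/{r.get('id')}" in touched:
            patients.update(ref for ref in _refs(r.get("subject")) if ref.startswith("Patient/"))
    return patients
```

`_refs` accepts either a single Reference or a list, because elements such as Composition.author are repeating while Observation.device is not.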

When using AI, it is common to reuse inputs, such as prompts, that are believed to produce "good" inferences, only to realize later that an input is flawed and results in poor or dangerous AI output. One then needs to discover all the instances where a Patient may have been impacted by this input.
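A sketch of tracing impact from a flawed input follows. It assumes, without confirmation from this page, that each AI output has a Provenance whose `entity.what` references the input used (for example a DocumentReference holding the prompt); `DocumentReference/prompt-v3` and the function names are hypothetical. Outputs are found through `Provenance.target`, and Patients through each output's `subject`.

```python
from typing import Any

Resource = dict[str, Any]


def outputs_from_input(provenances: list[Resource], input_ref: str) -> set[str]:
    """Targets of every Provenance that lists input_ref as an entity (assumed recording)."""
    targets: set[str] = set()
    for prov in provenances:
        used = {(e.get("what") or {}).get("reference") for e in prov.get("entity", [])}
        if input_ref in used:
            targets.update(t["reference"] for t in prov.get("target", []) if "reference" in t)
    return targets


def patients_for(resources: list[Resource], refs: set[str]) -> set[str]:
    """Patient references from the subject of each resource named in refs."""
    patients: set[str] = set()
    for r in resources:
        if f"{r.get('resourceType')}/{r.get('id')}" in refs:
            subject = r.get("subject") or []
            # subject may be a single Reference or a list, depending on resource/version.
            for s in subject if isinstance(subject, list) else [subject]:
                ref = s.get("reference", "")
                if ref.startswith("Patient/"):
                    patients.add(ref)
    return patients
```

Recording the input as a referenceable resource is what makes this query possible; an input kept only as free text would have to be matched by content instead.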