ACT-NOW Implementation Guide

0.4.0 - ci-build

ACT-NOW Implementation Guide, published by Te aho o te kahu - Cancer Control Agency. This guide is not an authorized publication; it is the continuous build for version 0.4.0 built by the FHIR (HL7® FHIR® Standard) CI Build. This version is based on the current content of https://github.com/davidhay25/actnow/ and changes regularly. See the Directory of published versions

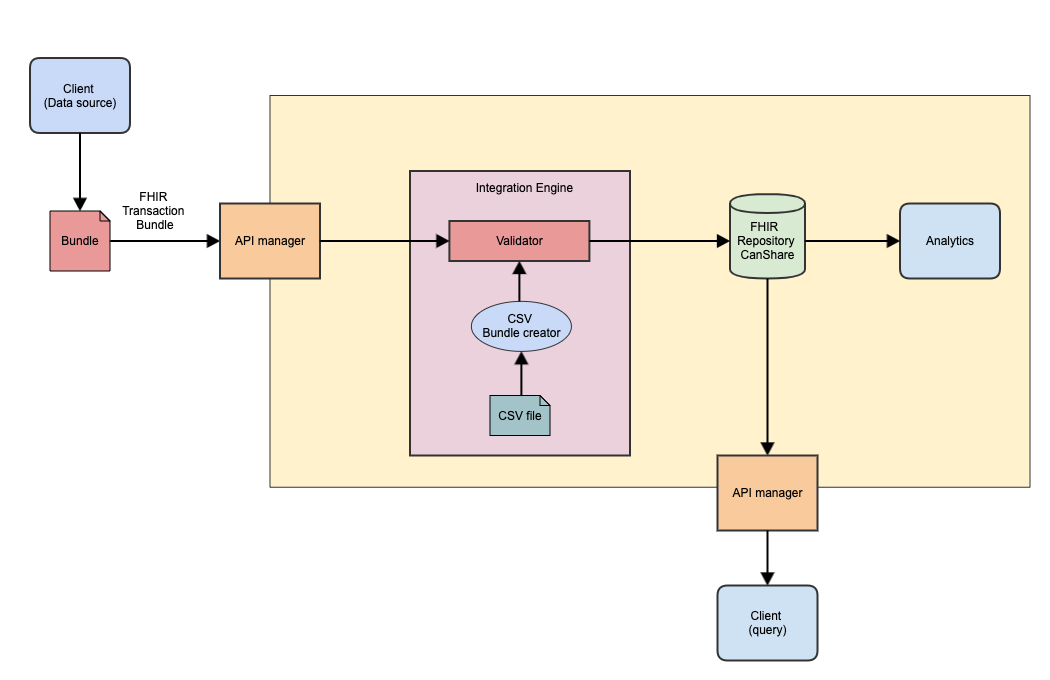

The system is designed as a data aggregator - accepting data from a small number of systems (it is a highly specialised domain) and saving it into a FHIR server as patient-specific information. Data is then available for use by other systems, including analytics and potentially care delivery.

It is assumed that the CanShare server will only hold resources used by the system - i.e. it is not a shared server.

This guide does not include security in any detail.

The overall architecture of the proposed solution is as follows:

(todo: not sure about the csv input…)

This is the source of ACT-NOW data into the repository. It is submitted as FHIR resources in a transaction bundle at regular intervals, likely daily in the first instance. The bundle will be validated before being accepted. If it fails validation, then it will need to be corrected in the source system and re-submitted.
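As a rough illustration of what such a submission could look like, the sketch below wraps a list of resources in a transaction bundle. It assumes each resource carries a business identifier and that entries use conditional updates (the approach this guide assumes in its server requirements). The function name `make_transaction_bundle` and the identifier system are hypothetical, not part of this guide.

```python
import uuid


def make_transaction_bundle(resources, identifier_system):
    """Wrap resources in a FHIR transaction Bundle using conditional updates.

    Assumption: every resource has an identifier from `identifier_system`.
    """
    entries = []
    for resource in resources:
        # Find the business identifier used to match an existing resource.
        ident = next(
            i for i in resource["identifier"] if i["system"] == identifier_system
        )
        entries.append({
            "fullUrl": f"urn:uuid:{uuid.uuid4()}",
            "resource": resource,
            "request": {
                # Conditional update: create if no match, update if exactly one match.
                "method": "PUT",
                "url": f"{resource['resourceType']}"
                       f"?identifier={ident['system']}|{ident['value']}",
            },
        })
    return {"resourceType": "Bundle", "type": "transaction", "entry": entries}
```

Because each entry is keyed on a business identifier rather than a server-assigned id, re-submitting a corrected bundle updates the same resources instead of creating duplicates.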

The API manager is the interface between external systems and the internal CanShare systems. It applies security and authentication / authorisation policies for both submitters of data and clients performing queries.

Given that there are only a small number of clients supplying data, there could be a separate endpoint for each client. This could help with provenance.

The validator is used to ensure that the data in the bundle is fit for purpose. This occurs at a number of levels. (Link to details here)

If the bundle fails validation, then it will be rejected and will need to be corrected and re-submitted by the sender. This is not only for clinical use but also to ensure that the analytics are performed against valid data - it is much harder to correct data once it has been imported into the FHIR server.

Rejecting invalid bundles brings support requirements of its own, which are still being developed. Specifically, the order in which bundles are submitted may be important, as some resources (particularly the Care Plans) are updated as part of the data acquisition. This means the most recent version of a resource needs to be submitted last.
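One way to handle the ordering concern, sketched here under the assumption that every bundle carries a `Bundle.timestamp` set by the source system, is to sort pending bundles oldest first before submission. The helper names are hypothetical.

```python
from datetime import datetime


def parse_instant(value):
    """Parse a FHIR instant; older Python versions reject a trailing 'Z'."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def order_for_submission(bundles):
    """Return bundles oldest first, so the newest resource version lands last.

    Assumption: each bundle has a `timestamp` element with a timezone offset.
    """
    return sorted(bundles, key=lambda b: parse_instant(b["timestamp"]))
```

Sorting on parsed values rather than raw strings keeps the order correct when sources report timestamps in different timezone offsets.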

This step could also add a Provenance resource to the bundle (it could be supplied by the originating system, though there is value in it being applied by the system.)
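A minimal sketch of how the validation step might attach a Provenance resource is shown below. It fills only the elements that FHIR R4 requires on Provenance (`target`, `recorded`, `agent.who`). The function name and the submitter reference are hypothetical, and it assumes every existing entry has a `fullUrl`.

```python
import uuid
from datetime import datetime, timezone


def add_provenance(bundle, submitter_reference):
    """Append a Provenance entry targeting every entry already in the bundle.

    Note: modifies `bundle` in place and also returns it.
    """
    # Collect targets first, so the Provenance does not reference itself.
    targets = [{"reference": entry["fullUrl"]} for entry in bundle["entry"]]
    provenance = {
        "resourceType": "Provenance",
        "target": targets,
        "recorded": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "agent": [{"who": {"reference": submitter_reference}}],
    }
    bundle["entry"].append({
        "fullUrl": f"urn:uuid:{uuid.uuid4()}",
        "resource": provenance,
        "request": {"method": "POST", "url": "Provenance"},
    })
    return bundle
```

If each submitter has its own endpoint, the value passed as `submitter_reference` could be derived from the endpoint rather than trusted from the payload.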

In this architecture, the validator function is performed by an Integration Engine - though other architectures are certainly feasible. However, it is assumed that the FHIR server is a ‘generic’ server and so will not be capable of the business level validation required.

A FHIR server that holds the data as FHIR resources - currently version 4.0.1. It must support a number of the RESTful API interactions - the most important being conditional updates, and support of the ‘_since’ parameter needed by the analytics toolchain. However, it is assumed that it will support the majority of the RESTful API, to give clients maximum flexibility when querying.

This represents the toolchain that ultimately updates the analytics repository - currently a Snowflake instance. This toolchain will incrementally query the FHIR server for data.
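An incremental query of this kind could be built on the FHIR history interaction, which accepts a `_since` parameter. The sketch below only constructs the request URL. The base URL is a placeholder, and the function name is hypothetical.

```python
from urllib.parse import urlencode


def history_since_url(base_url, resource_type, since):
    """Build a type-level history URL for changes after `since` (a FHIR instant)."""
    # urlencode escapes ':' and '+', which matters for timezone offsets.
    query = urlencode({"_since": since})
    return f"{base_url.rstrip('/')}/{resource_type}/_history?{query}"


# Example: ask for CarePlan changes since the last successful extract.
url = history_since_url(
    "https://fhir.example.org", "CarePlan", "2024-03-01T00:00:00Z"
)
```

The toolchain would record the time of each successful extract and pass it as `since` on the next run.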

A key premise of the architecture is that validation is applied at data acquisition, as described above. Hence, it should not be needed here (though it could be applied if required).

Represents an external client querying the data - such as an MDM meeting. Security is applied by the API manager.

This is a component that creates bundles from CSV files and submits them via the REST interface to the system. This is to support a legacy system that is capable of extracting data to CSV, but not to FHIR.

The component is depicted as being part of the Integration Engine, and so sits inside the system and doesn’t necessarily need to go through the API manager, though it could be located elsewhere.

There is a JavaScript component developed as part of the Reference Implementation that can perform this conversion. It could serve as an example for development.

The CSV bundle creator may not be required, depending on business decisions.
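For illustration only, a CSV-to-bundle conversion might look like the sketch below. It is not the Reference Implementation component, and the column names (`nhi`, `family`, `given`), the identifier system and the function name are all hypothetical.

```python
import csv
import io
import uuid


def csv_to_bundle(csv_text, identifier_system):
    """Convert CSV rows into a transaction Bundle of Patient resources.

    Assumption: the CSV has a header row with nhi, family and given columns.
    """
    entries = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        patient = {
            "resourceType": "Patient",
            "identifier": [{"system": identifier_system, "value": row["nhi"]}],
            "name": [{"family": row["family"], "given": [row["given"]]}],
        }
        entries.append({
            "fullUrl": f"urn:uuid:{uuid.uuid4()}",
            "resource": patient,
            "request": {
                # Conditional update keyed on the business identifier.
                "method": "PUT",
                "url": f"Patient?identifier={identifier_system}|{row['nhi']}",
            },
        })
    return {"resourceType": "Bundle", "type": "transaction", "entry": entries}
```

The resulting bundle would then go through the same validation as any other submission.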

There are two places where validation occurs - when the bundle is received for processing and when data is extracted for analytics.

It is intended that all bundles are validated before being accepted using the standard FHIR validation process against the conformance artifacts in this guide.
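FHIR validation reports its findings as an OperationOutcome, where each issue has a severity of `fatal`, `error`, `warning` or `information`. A simple acceptance rule, assumed here rather than specified by this guide, is to reject a bundle when any issue is `error` or `fatal`. The function name is hypothetical.

```python
BLOCKING_SEVERITIES = {"fatal", "error"}


def is_acceptable(operation_outcome):
    """Return True when the OperationOutcome contains no blocking issues."""
    issues = operation_outcome.get("issue", [])
    return not any(i.get("severity") in BLOCKING_SEVERITIES for i in issues)


def blocking_messages(operation_outcome):
    """Collect the diagnostics of blocking issues to return to the sender."""
    return [
        i.get("diagnostics", "")
        for i in operation_outcome.get("issue", [])
        if i.get("severity") in BLOCKING_SEVERITIES
    ]
```

Returning the blocking diagnostics to the sender gives them what they need to correct the data in the source system before re-submitting.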

to do - why is this needed here - examples as well…

This section summarizes the minimum requirements of the FHIR server - details of interactions are in the API Guides. This section assumes that the transaction with conditional update approach is used.

Refer to the CapabilityStatement for details.

Ideally the server will support the complete RESTful API defined by FHIR - as does the HAPI server, for example. (The HAPI CLI is used by the reference implementation - though it is in no way suitable for a production deployment.)

The key features needed are:

Features that might be supported either by the server or some application ‘in front’ of it - like an Integration Engine